An important term in the evaluation of an engine is

King Safety. This addresses the fact that the game is lost when the own king is mated no matter what. This also allows an engine to consider material sacrifices when the enemy king comes under pressure by them. So without a decent king safety an engine will provide their opponents good chances for some spectacular mate combinations.

A simple approach is just to count the enemy pieces near the own king. The more pieces the more dangerous it is. This ignores the fact that sliders can be very dangerous from a distance. It is better than nothing but usually not good enough.

A more sophisticated implementation counts the attackers and their attacks against the area of the own king. It also considers defenders. Finally the counter is used as a look-up index into a non linear table. So a lone queen or rook attacking the king is not so bad, but if a knight and a rook join into the attack the king safety score jumps up exponentially.

iCE uses the later approach. So it maintains a counter that is increased if an enemy piece attacks a

square near the king and decreased again if the square is defended by a own piece. The amount of increase and decrease depends on the piece type. In the current release of iCE those values are not tuned. So I just thought of some meaning full values, eg. if the queen attacks increase by 3, if the knight attacks increase by 2, if the pawn defends decrease by 1 etc...

I thought it might make sense to verify that the values I picked here are really good ones. Intuition is usually not the best adviser in chess programming.

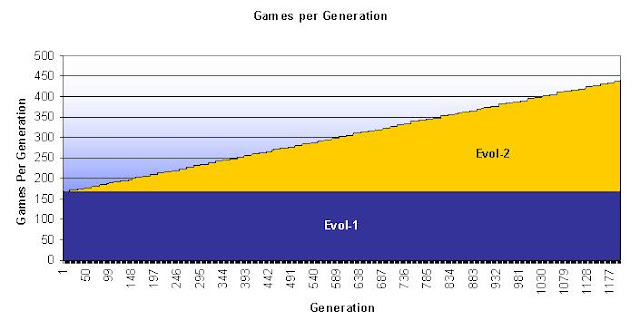

I revived my genetic algorithm tuning framework that was responsible for most of the ELO gain of iCE 1.0 and modified it a bit to just tune the king safety counter values and not all the rest of the weights.

I then started an evolution of 1000 generations with a population size of 64, where the fittest individual is determined by a knock-out tournament within the generation. It ran for almost two weeks.

Here are some impressions:

The ranking table of the individuals of the final (1000.) generation. The 32 genoms that did not survive the initial 2-games round are removed from the list.

Rank Name Elo + - games score oppo. draws

1 ice.05 226 59 59 100 64% 131 23%

3 ice.49 200 116 116 20 53% 191 35%

4 ice.36 163 95 95 32 53% 154 31%

5 ice.60 156 56 56 100 50% 164 31%

6 ice.32 151 169 169 8 56% 127 38%

7 ice.17 144 72 72 56 54% 123 36%

10 ice.25 123 125 125 20 58% 96 15%

12 ice.26 119 169 169 8 44% 152 38%

13 ice.50 118 174 174 8 56% 96 38%

14 ice.33 117 169 169 8 44% 158 38%

16 ice.59 113 115 115 20 48% 131 35%

17 ice.29 111 197 197 8 50% 146 0%

18 ice.04 92 121 121 20 50% 97 20%

20 ice.47 81 93 93 32 53% 65 38%

21 ice.13 81 168 168 8 56% 61 38%

24 ice.43 75 167 167 8 44% 103 38%

26 ice.52 69 90 90 32 47% 89 44%

28 ice.10 66 113 113 20 55% 35 40%

30 ice.40 41 175 175 8 38% 103 25%

32 ice.16 39 78 78 56 51% 47 27%

34 ice.62 31 163 163 8 50% 38 50%

36 ice.08 4 178 178 8 50% 19 25%

37 ice.27 -11 173 173 8 56% -24 38%

39 ice.35 -20 118 118 20 45% 14 30%

42 ice.02 -44 182 182 8 44% 20 13%

48 ice.41 -102 93 93 32 45% -73 41%

49 ice.22 -108 169 169 8 44% -61 38%

50 ice.53 -118 120 120 20 45% -53 30%

52 ice.00 -127 114 114 20 55% -147 40%

54 ice.19 -149 168 168 8 44% -114 38%

55 ice.51 -170 162 162 8 50% -159 50%

58 ice.48 -187 164 164 8 38% -135 50%

Here the winner was ice0.5. The final round was played between the individuals ice.05 and ice.60 and it ended 20 - 11 - 13.

|

| Probability Vector after 100 generations. Most bits haven't decided yet whether to converge towards 0 or 1. |

|

| Probability Vector after 1000 generations. Most bits are fully converged towards 0 or 1. |

The evolution made slow but steady progress. The entropy of the system dropped from 41 to about 10.

Some observations:

My original handpicked values were not so far off in general.

The knight seems more dangerous in attacking the squares in front of the king than the bishop, where as the bishop is a better defender. The most powerful attacking piece is the rook.

Rook and queen are bad defenders.

The situation becomes more dangerous if the enemy has safe checks available, especially from the sliders (bishop, rook and queen). A safe check from a knight is almost worthless.

The threat of a back rank mate is about as dangerous as an additional attacker.

Result:

I played a final 16k games match between the tuned and the original version. It shows that with a very high statistical confidence the new iCE is stronger. Only 10 (selfplay) ELO, but most patches score muss less and I actually haven't added code. I just modified some values. So I decided to count my little project as a success.

Rank Name Elo + - games score oppo. draws

1 ice.tuned 5 4 4 16000 52% -5 36%

3 ice.base -5 4 4 16000 48% 5 36%