This is fundamentally different from my former approach, where I measured position-solving performance:

- It is computationally much more expensive.

- It is not reproducible. If the best individual of a generation is determined and the calculation is then repeated, chances are very high that a different individual would be selected. This is caused by the high degree of randomness involved in playing only a small number of games.

- There is no guarantee that the truly best individual wins a generation tournament, but chances are that at least a good one does.
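The randomness argument can be made concrete with the standard error of a match score. This is a minimal sketch, assuming independent games scored 1, ½ or 0 and a known expected score and draw rate; `scoreStdError` is a hypothetical helper, not part of the tuner:

```cpp
#include <cmath>

// Standard error of the mean score over `games` independent games, where each
// game scores 1 (win), 0.5 (draw) or 0 (loss). `score` is the expected score
// per game and `drawRate` the expected fraction of draws (assumed known here).
double scoreStdError(double score, double drawRate, int games) {
    // Per-game variance: E[x^2] - E[x]^2 = (score - drawRate / 4) - score^2
    double variance = score * (1.0 - score) - drawRate / 4.0;
    return std::sqrt(variance / games);
}
```

For two evenly matched individuals (expected score 0.5) with 30% draws, two games give a standard error of about 0.30, while 12,000 games give about 0.004. With only a few games per pairing, the weaker individual of a pair advancing is routine, which is why the tournament winner changes when the calculation is repeated.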

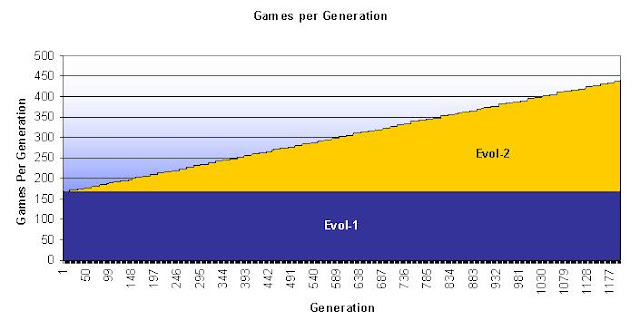

I call the two runs evol-1 and evol-2.

| | Evol-1 | Evol-2 |
|---|---|---|
| Runtime (hrs) | 185 | 363 |
| Generations | 1,100 | 1,200 |
| Total games | 184,800 | 362,345 |
| EPDs solved* | 2,646 | 2,652 |

*The number of positions solved out of a test set of 4,109 positions. This number is given to relate this GA to the previous test, where the number of solved positions was the fitness criterion. Both solutions are better than the untuned version, which solved only 2,437 positions, but worse than the version optimized for solving this set, which solved 2,811.
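Dividing the table's totals gives the average load per generation and the throughput of each run (derived, rounded figures):

$$
\text{evol-1: } \frac{184\,800}{1\,100} = 168 \ \tfrac{\text{games}}{\text{generation}}, \qquad \frac{184\,800}{185\ \text{h}} \approx 999 \ \tfrac{\text{games}}{\text{h}}
$$

$$
\text{evol-2: } \frac{362\,345}{1\,200} \approx 302 \ \tfrac{\text{games}}{\text{generation}}, \qquad \frac{362\,345}{363\ \text{h}} \approx 998 \ \tfrac{\text{games}}{\text{h}}
$$

Both runs played roughly 1,000 games per hour, which suggests that evol-2's longer runtime comes from playing about 1.8 times as many games per generation, not from slower games.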

Figure: Entropy development of evol-1 and evol-2

Figure: Comparison of the ability to solve the positions in an EPD file

And finally, the real test: a direct round-robin comparison between the two and the base version.

And the OSCAR goes to: evol-2

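| Rank | Name | Elo | + | - | Games | Score | Oppo. | Draws |
|---|---|---|---|---|---|---|---|---|
| 1 | ice.evol-2 | 89 | 5 | 5 | 12000 | 58% | 35 | 32% |
| 2 | ice.evol-1 | 69 | 5 | 5 | 12000 | 54% | 44 | 33% |
| 3 | ice.04 | 0 | 5 | 5 | 12000 | 39% | 79 | 28% |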
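The Elo column can be roughly cross-checked from the score and the average opponent rating with the standard logistic Elo formula. This is a sketch only: the tool that produced the table may model draws differently, so small deviations are expected. `eloFromScore` is a hypothetical helper:

```cpp
#include <cmath>

// Elo difference implied by an average score under the logistic Elo model:
//   score = 1 / (1 + 10^(-diff / 400))  =>  diff = 400 * log10(score / (1 - score))
// Only valid for 0 < score < 1.
double eloFromScore(double score) {
    return 400.0 * std::log10(score / (1.0 - score));
}
```

For ice.evol-2, `eloFromScore(0.58)` is about +56, and 35 + 56 = 91, close to the reported 89. The same check gives about 44 + 28 = 72 for ice.evol-1 (reported 69) and 79 - 78 = 1 for ice.04 (reported 0).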

It looks like all the effort finally paid off, especially considering that the base version is not a weak engine either. It is a bit stronger than the official iCE 0.3, which is rated 2475 Elo in the CCRL.

Next, I may manually tweak some of the weights from the final set, because some of them look suspicious. I wonder whether I'll be able to win half a point back against the evolution ...

Hi Thomas,

How do you manage to set the parameters for each engine with cutechess-cli?

Are you using configuration files, or are you sending each engine configuration parameter through stdin?

I'm using a kind of configuration file. When a generation starts, I generate a file with the corresponding weights for each individual. It looks like this:

weights_00.cmd:

```
setweight id 2 values 320 45
setweight id 3 values 400 156
setweight id 4 values 536 817
...
```
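As a sketch of the tuner side, the following C++ writes one command file per individual in the `setweight id N values A B` format. The `Weight` struct, the helper names and the meaning of the two values per weight are assumptions for illustration, not code from the actual tuner:

```cpp
#include <cstdio>
#include <fstream>
#include <sstream>
#include <string>
#include <vector>

// One tunable weight as it appears in a command file: an id and two values.
// (What the two values mean is engine-specific; this struct is hypothetical.)
struct Weight {
    int id;
    int value1;
    int value2;
};

// File name for individual `index`, e.g. 0 -> "weights_00.cmd".
std::string weightsFileName(int index) {
    char buf[32];
    std::snprintf(buf, sizeof buf, "weights_%02d.cmd", index);
    return buf;
}

// Render the weights as "setweight id N values A B" lines, one per weight.
std::string formatWeights(const std::vector<Weight>& weights) {
    std::ostringstream out;
    for (const Weight& w : weights) {
        out << "setweight id " << w.id << " values " << w.value1 << ' ' << w.value2 << '\n';
    }
    return out.str();
}

// Write one individual's command file; returns false if writing fails.
bool writeWeightsFile(const std::string& path, const std::vector<Weight>& weights) {
    std::ofstream file(path);
    if (!file) return false;
    file << formatWeights(weights);
    return static_cast<bool>(file);
}
```

Calling `writeWeightsFile(weightsFileName(i), genes[i])` for every individual `i` (where `genes` is a hypothetical per-individual weight list) at the start of a generation yields `weights_00.cmd`, `weights_01.cmd`, and so on.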

iCE implements a UCI option "CommandFile" with which I can specify an external file.
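On the engine side, handling such an option amounts to reading the named file and applying each line. A minimal sketch of the parsing step, assuming the file contains only `setweight` lines like the ones above; the function name and return type are hypothetical, and iCE's actual implementation is not shown in this post:

```cpp
#include <istream>
#include <map>
#include <sstream>
#include <string>
#include <utility>

// Parse "setweight id N values A B" lines into a map id -> (A, B).
// Lines that don't match this pattern (blank lines, "...") are skipped.
std::map<int, std::pair<int, int>> parseCommandFile(std::istream& in) {
    std::map<int, std::pair<int, int>> weights;
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream fields(line);
        std::string cmd, idKey, valuesKey;
        int id = 0, a = 0, b = 0;
        if (fields >> cmd >> idKey >> id >> valuesKey >> a >> b &&
            cmd == "setweight" && idKey == "id" && valuesKey == "values") {
            weights[id] = {a, b};
        }
    }
    return weights;
}
```

In an engine, the UCI handler would open the path received in `setoption name CommandFile value <path>` with `std::ifstream` and pass the stream to this function before applying the weights.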

cutechess-cli accepts UCI options on the command line and sends them to the engine after starting it.

So when the matches are played, each iCE instance is started with a different command file, which initializes its weights.
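A sketch of how a tuner might build the per-engine part of the cutechess-cli command line. It uses cutechess-cli's `-engine` settings `cmd=`, `name=`, `proto=` and `option.NAME=VALUE`; the executable name, directory layout and helper name are assumptions:

```cpp
#include <string>
#include <vector>

// Build the cutechess-cli "-engine" arguments for one individual. The
// option.CommandFile=... token makes cutechess-cli send
// "setoption name CommandFile value <file>" to the engine at startup.
std::vector<std::string> engineArgs(const std::string& exe, int index,
                                    const std::string& dir) {
    // Two-digit suffix matching weights_00.cmd, weights_01.cmd, ...
    std::string suffix = (index < 10 ? "0" : "") + std::to_string(index);
    return {
        "-engine",
        "cmd=" + exe,
        "name=ice." + suffix,
        "proto=uci",
        "option.CommandFile=" + dir + "weights_" + suffix + ".cmd",
    };
}
```

A match then passes two such groups, one per individual, followed by the match settings (for example `-games`).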

In the cutechess-cli debug output, it then looks like this:

```
687 >ice.00(0): setoption name CommandFile value C:\Docs\cpp\tuner\pbil1\Arena\weights_00.cmd
734 >ice.00(0): isready
734 <ice.00(0): Setting weight KNIGHT_MATERIAL [ 2] values to (320, 45)
734 <ice.00(0): Setting weight BISHOP_MATERIAL [ 3] values to (400, 56)
734 <ice.00(0): Setting weight ROOK_MATERIAL [ 4] values to (536, 817)
1234 <ice.00(0): readyok
```
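Because the engine echoes every weight it sets, the debug log can double as a sanity check that each individual really played with its own weights. A minimal sketch that extracts those echo lines, assuming exactly the line format above; the struct and function names are hypothetical:

```cpp
#include <cstdio>
#include <string>

// One "Setting weight" line echoed by the engine in the debug log.
struct EchoedWeight {
    std::string name;
    int id = 0;
    int value1 = 0;
    int value2 = 0;
};

// Parse a line such as
//   734 <ice.00(0): Setting weight KNIGHT_MATERIAL [ 2] values to (320, 45)
// Returns false for any other log line (isready, readyok, ...).
bool parseEchoedWeight(const std::string& line, EchoedWeight& out) {
    long timestamp = 0;
    char name[64] = {};
    int id = 0, v1 = 0, v2 = 0;
    // %*[^:] skips the engine tag "ice.00(0)" up to the colon
    int matched = std::sscanf(line.c_str(),
        "%ld <%*[^:]: Setting weight %63s [ %d] values to (%d, %d)",
        &timestamp, name, &id, &v1, &v2);
    if (matched != 5) return false;
    out.name = name;
    out.id = id;
    out.value1 = v1;
    out.value2 = v2;
    return true;
}
```

Comparing the parsed values with the ones written to the individual's command file catches an engine that ignored or misread its file.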

I haven't understood how you scale the number of games per generation so smoothly!

Actually, it is a series of small steps:

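```cpp
// Base: 2 games, doubled every second knockout round
task.games = int(2 * pow(2.0, floor(round / 2.0)));
// After the first round, add games in proportion to the generation number
if (round > 0) task.games += (task.games * generation) / 500;
// In the last round, add one extra game per 50 generations
if (lastround) task.games += int(generation / 50);
```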

`round` is the round in the current knockout tournament.

`task.games` is the number of games played between two engines.
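To see how the schedule scales, the three rules can be wrapped into a self-contained function. A sketch under stated assumptions: `round` starts at 0 for the first round, `generation` is a non-negative integer, and the function name is hypothetical:

```cpp
// Number of games two individuals play against each other in one knockout
// match, following the three rules above.
int gamesPerMatch(int round, int generation, bool lastRound) {
    // 2, 2, 4, 4, 8, 8, ...: equals int(2 * pow(2.0, floor(round / 2.0))) for round >= 0
    int games = 2 << (round / 2);
    // From the second round on, grow linearly: +100% of the base count per 500 generations
    if (round > 0) games += (games * generation) / 500;
    // The last round gets one extra game per 50 generations
    if (lastRound) games += generation / 50;
    return games;
}
```

For example, the first round always plays 2 games; round 2 at generation 500 plays 8; and a final in round 3 at generation 1,000 plays 4 + 8 + 20 = 32. Because of the integer divisions, the increase arrives in small discrete steps: in round 1 at generation 100, 2 × 100 / 500 truncates to 0, so that match still has 2 games.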